The Age of Intelligent Machines

Artificial Intelligence (AI) is no longer a futuristic concept. It already shapes how we work, learn, shop, travel, and even make personal decisions. From voice assistants to content generators and predictive algorithms, AI systems have become the invisible hands guiding much of modern life.

But as AI systems grow more powerful, a critical question arises: can we trust them?

Understanding how AI makes decisions, who controls it, and how transparent those systems are is the foundation for a future where technology serves humanity, not the other way around.

As the world rapidly transforms through these intelligent systems, our sense of progress — even time itself — feels different. You can explore this deeper in our related article, “We’re Living in a Rapidly Evolving AI World Where Even Time Has Lost Its Rhythm.”

What Transparency in AI Really Means

“Transparency” in AI means knowing how and why a system makes its decisions.

Today’s deep learning and large language models act like black boxes — they learn from massive datasets and produce results, but even experts can’t always explain how those results are formed.

Think about it:

- Why did an AI recommend a specific medical treatment?

- Why did a hiring algorithm reject a candidate?

- Why did an AI-powered news feed highlight certain stories?

Without understanding these “whys,” users can’t fully trust AI.

True transparency means:

✅ Knowing what data trained the AI

✅ Understanding how it makes decisions

✅ Being able to audit and question its outcomes

Only when these layers are open can AI earn genuine trust.

The “Black Box” Problem: When Machines Think Without Explaining

AI systems don’t think like humans — they recognize patterns, not reasons.

That’s powerful, but also risky.

For example:

- A facial recognition AI might work well for one demographic but fail for another.

- A content moderation tool might flag harmless posts because it misunderstands cultural context.

This is known as the black box problem — we know the input and the output, but not what happens inside.

To solve this, researchers are building Explainable AI (XAI) — systems that not only give answers but also explain the reasoning behind them.

Example: Instead of saying “Loan denied,” a transparent AI would say:

“Loan denied due to insufficient credit history and low repayment capacity.”

That’s trust through clarity — and it’s the future of responsible AI.
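The loan example above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any real lender scores applicants: the feature names, weights, baseline values, and the `decide` function are all hypothetical. Each feature contributes to a score, and when the loan is denied the features that pulled the score down are reported as the reasons.

```python
from dataclasses import dataclass

# Hypothetical weights and baseline applicant, chosen only for illustration.
WEIGHTS = {"credit_history_years": 0.5, "repayment_capacity": 4.0}
BASELINE = {"credit_history_years": 5.0, "repayment_capacity": 0.5}
REASONS = {
    "credit_history_years": "insufficient credit history",
    "repayment_capacity": "low repayment capacity",
}


@dataclass
class Decision:
    approved: bool
    explanation: str


def decide(applicant: dict) -> Decision:
    # Contribution of each feature, measured against the baseline applicant.
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - BASELINE[name])
        for name in WEIGHTS
    }
    score = sum(contributions.values())
    if score >= 0:
        return Decision(True, "Loan approved.")
    # List the features that lowered the score, most harmful first.
    negatives = sorted(
        (name for name, value in contributions.items() if value < 0),
        key=lambda name: contributions[name],
    )
    reasons = " and ".join(REASONS[name] for name in negatives)
    return Decision(False, f"Loan denied due to {reasons}.")


result = decide({"credit_history_years": 1.0, "repayment_capacity": 0.2})
print(result.explanation)
```

A simple additive score like this is easy to explain because every feature's effect can be read off directly. Deep learning models need dedicated explanation techniques to produce comparable reasons.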

Why Human Control Still Matters

AI can write, diagnose, design, and even compose — but it should never replace human judgment.

The idea of “human-in-the-loop” (HITL) is that important AI decisions, whether in medicine, finance, hiring, or law, are reviewed by real people before they take effect.

Imagine a hospital AI detecting a rare illness. The AI might flag the anomaly, but a doctor must interpret it — applying ethics, empathy, and expertise.

Human control isn’t about limiting AI — it’s about keeping humans in command.

When people guide AI, machines become collaborators — not replacements.
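As a rough sketch of what human-in-the-loop can look like in software, the snippet below holds back any AI suggestion in a high-stakes domain until a person signs off. The `HIGH_STAKES` set, the `Suggestion` type, and the function names are assumptions made for this example, not part of any specific product.

```python
from dataclasses import dataclass
from typing import Optional

# Domains treated as high-stakes here; the exact set is an assumption.
HIGH_STAKES = {"medicine", "finance", "hiring", "law"}


@dataclass
class Suggestion:
    domain: str
    summary: str


def route(suggestion: Suggestion) -> str:
    """Decide whether a person must review this suggestion."""
    return "human_review" if suggestion.domain in HIGH_STAKES else "auto_apply"


def finalize(suggestion: Suggestion, reviewer_approves: Optional[bool] = None) -> str:
    """Apply low-stakes suggestions; hold high-stakes ones for a reviewer."""
    if route(suggestion) == "auto_apply":
        return "applied automatically"
    if reviewer_approves is None:
        return "pending: waiting for a human reviewer"
    return "approved by reviewer" if reviewer_approves else "rejected by reviewer"


flag = Suggestion("medicine", "Scan shows a possible rare anomaly")
print(finalize(flag))                          # held until a doctor reviews it
print(finalize(flag, reviewer_approves=True))  # the doctor makes the final call
```

The point of the design is that the AI only flags. In the high-stakes path there is no way for the suggestion to take effect without a reviewer's answer.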

Why Trust in AI Is a Global Priority

Trust is the bridge between humans and machines. Without it, even the most advanced AI won’t be accepted.

Globally, people feel both excited and uneasy about AI:

- Some see it as a way to boost productivity and innovation.

- Others fear bias, misinformation, or job loss.

To build trust, AI must be:

✅ Transparent – Users know how it works and what data it uses

✅ Accountable – There’s responsibility for mistakes

✅ Ethical – AI aligns with human values and fairness

When people understand AI’s purpose and limits, confidence replaces fear.

Building Transparency: The Technical + Ethical Approach

Building transparent AI isn’t just about code — it’s about philosophy.

Here are five pillars of trustworthy AI systems:

- Explainable AI (XAI) – Algorithms that explain their reasoning.

- Open Model Reporting – Companies publish transparency reports on training data, limitations, and purpose.

- Ethical Guidelines – Boards ensure fairness and human rights protection.

- Human Oversight – Humans approve critical decisions.

- Public Education – Teaching AI literacy globally, from schools to workplaces.

When these principles are applied, AI shifts from mystery to clarity.

Real-World Examples of AI Transparency and Control

Transparency isn’t theory — it’s already happening in many industries:

🔬 Healthcare

AI tools that analyze X-rays or detect cancer must explain their findings to doctors. Trust grows when doctors can verify the AI’s logic.

💼 Recruitment

Some AI hiring tools now include bias detection checks that compare outcomes across gender, race, and age to flag unfair evaluation.
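One simple check of this kind compares how often candidates from each group are selected. The sketch below is a minimal version under stated assumptions: the group labels and records are made up, and the 0.8 cutoff follows the commonly cited "four-fifths" rule of thumb, which is a screening heuristic rather than proof of fairness or bias.

```python
from collections import Counter


def selection_rates(records):
    """records: iterable of (group, was_selected) pairs."""
    totals, selected = Counter(), Counter()
    for group, was_selected in records:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group
    return min(rates.values()) / highest


# Made-up screening results for two hypothetical groups.
records = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(records)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold from the four-fifths heuristic
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not say why the gap exists. It only tells a human reviewer where to look first.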

💳 Finance

In many jurisdictions, banks that use AI for loans and fraud detection are legally required to explain automated decisions to customers.

📱 Everyday Apps

Chatbots, translators, and content models like ChatGPT or Gemini increasingly include citations, transparency notes, and disclaimers. These are small steps that make a big difference.

The Psychology of AI Trust

Trust isn’t only logical — it’s emotional.

People trust AI systems that are:

✅ Consistent

✅ Predictable

✅ Honest about their limits

✅ Empathetic

When an AI says, “I’m not sure, please verify,” users feel safer.

That human-like honesty builds confidence.
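Being honest about limits can be as simple as refusing to sound certain when the model is not. The sketch below assumes the model exposes a confidence score between 0 and 1. The function name and the 0.75 threshold are illustrative choices, not a standard.

```python
def answer_with_honesty(label: str, confidence: float, threshold: float = 0.75) -> str:
    """Return the answer, adding a caveat when confidence is below the threshold."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence < threshold:
        return (
            f"I'm not sure (confidence {confidence:.0%}). "
            f"My best guess is '{label}', please verify."
        )
    return f"{label} (confidence {confidence:.0%})"


print(answer_with_honesty("benign", 0.62))
print(answer_with_honesty("benign", 0.90))
```

In practice the confidence score itself has to be well calibrated for this to help. A model that is confidently wrong would pass straight through the check.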

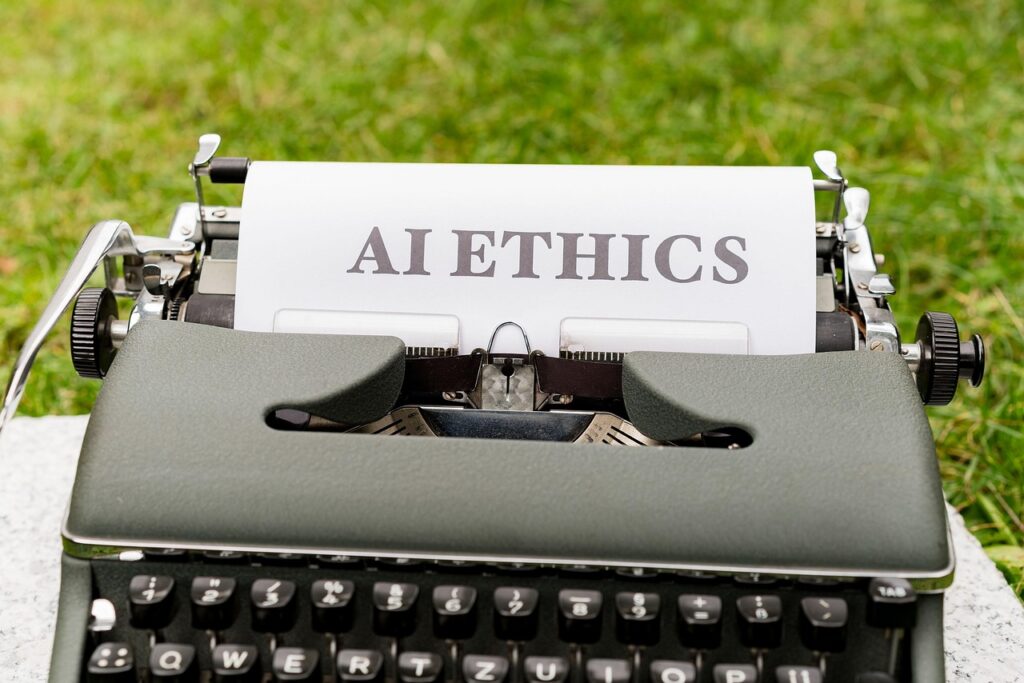

Global Efforts Toward Responsible AI

Governments and organizations worldwide are realizing the importance of AI ethics.

Notable initiatives include:

- UNESCO AI Ethics Recommendation – Promotes fairness and accountability (adopted by UNESCO’s 193 member states in 2021).

- OECD AI Principles – Global guidelines for responsible AI.

- AI Safety Institutes (U.S., U.K., Asia) – Researching safe, explainable systems.

Tech companies are also collaborating to ensure AI remains transparent and secure.

The global message is clear: Trustworthy AI is everyone’s responsibility.

How You Can Build AI Awareness

You don’t have to be a tech expert to use AI wisely. Start with small steps:

- Ask Questions – How did the AI create this result?

- Verify – Cross-check AI outputs with credible sources.

- Learn Basics – Understand simple AI terms like training data and bias.

- Choose Transparent Tools – Prefer apps that show sources or model details.

- Stay Curious – Explore AI, don’t fear it.

Awareness is protection. The more you know, the smarter your use of AI becomes.

The Future: From Smart Machines to Trustworthy Partners

In the coming decade, AI will power education, healthcare, transport, and creativity.

But progress will mean nothing without trust.

When transparency, control, and ethics align, AI stops being a black box and becomes a glass box: intelligent yet understandable.

That’s the kind of human-centered technology the world truly needs.

The Real Intelligence Is Shared Trust

AI’s future isn’t just about how smart machines become — it’s about how trustworthy they remain.

✅ Transparency builds understanding

✅ Human control ensures accountability

✅ Trust keeps humans and technology aligned

The more we open the black box, the closer we get to a future where AI serves humanity — ethically, intelligently, and transparently.

Written by Aieversoft Editorial Team

Exploring the bridge between AI innovation and human values.

FAQs

1. Are humans more trustworthy than AI?

Humans bring emotional intelligence, experience, and moral judgment, which makes them trustworthy in situations requiring empathy or complex decision-making. However, they may also be biased or inconsistent. AI is trustworthy for data-heavy, repetitive, and logical tasks but lacks human reasoning and ethical understanding.

2. Can AI make unbiased decisions?

AI can reduce human bias, but it is not completely bias-free. AI models learn from human-created data, so any bias in the data can influence AI’s output. With proper training, monitoring, and diverse datasets, AI can be made more objective than humans in many cases.

3. Should I rely more on AI or humans for important decisions?

For decisions requiring emotional understanding, ethics, and situational sensitivity, trust humans.

For data analysis, pattern detection, and speed, lean on AI, but verify its accuracy.

Most smart decisions today come from humans and AI working together.

4. Can AI replace human judgment?

No. AI can enhance judgment but cannot replace human morality, intuition, or contextual understanding. AI handles “what the data says,” while humans decide “why it matters.”

5. Is AI safe to trust with personal information?

AI tools can be safe if they follow strong privacy, encryption, and data-handling standards. But users must check the platform’s security policies and avoid oversharing personal or sensitive information.

6. Will AI take over human jobs completely?

AI will automate many routine tasks, but it is also expected to create new roles in creativity, supervision, strategy, and problem-solving. The future workforce is more likely to be AI-assisted than AI-replaced.

7. How can I decide when to trust AI?

Trust AI when:

- You need quick, accurate calculations

- You’re analyzing large datasets

- You want consistent results

Avoid relying solely on AI when:

- Ethics or emotions are involved

- Real-world consequences are major

- Situations require human communication or empathy

8. Is AI better than humans in decision-making?

AI is better in areas requiring logic, data processing, and quantitative decision-making. Humans are better at creativity, emotional connection, conflict resolution, and moral reasoning. The “best” outcome usually comes from a hybrid approach.

9. Does AI always give the correct answer?

No. AI can make mistakes due to incorrect data, lack of context, or limitations in its training dataset. It should be used as a support tool, not a final authority.

10. What is the ideal balance between humans and AI?

The ideal balance is AI for speed and accuracy + humans for reasoning and empathy. This combination leads to smarter, fairer, and more reliable outcomes.